I created a hand and gesture tracking algorithm that uses an Intel RealSense camera to control a PincherX 100 robotic arm. The goal of this project is to have the robot mimic actions that the user performs: moving in the four basic directions (up, down, left and right), moving forward and backward, and opening and closing the gripper.

Skills Used:

- OpenCV

- Python

- Manipulation

Design

The project has multiple components that build upon each other and work together to produce the final product. The first component gets the color and depth data from the camera. These two streams are needed to detect the hand, find its position in the image and measure how far it is from the camera. Getting the distance of the hand from the camera was one of the biggest challenges of the project.
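As a rough illustration of the depth lookup, the sketch below reads the distance at a pixel from a depth image that has already been converted to a NumPy array and aligned to the color image. The function name `hand_distance_m`, the `depth_scale` of 0.001 (raw units of one millimeter) and the window size are assumptions for illustration, not values from the project; the real scale should be queried from the camera. Taking the median over a small window and ignoring zero readings is one possible way to cope with missing depth values.

```python
import numpy as np

def hand_distance_m(depth_image, cx, cy, depth_scale=0.001, half_window=2):
    """Median distance in meters around pixel (cx, cy), or None if no valid depth.

    depth_image: 2D array of raw depth units (assumed aligned to the color image).
    depth_scale: meters per raw unit (assumed value; query the camera in practice).
    """
    h, w = depth_image.shape
    # Clamp the window to the image borders.
    y0, y1 = max(cy - half_window, 0), min(cy + half_window + 1, h)
    x0, x1 = max(cx - half_window, 0), min(cx + half_window + 1, w)
    patch = depth_image[y0:y1, x0:x1]
    valid = patch[patch > 0]  # a raw value of zero is treated as "no reading"
    if valid.size == 0:
        return None
    return float(np.median(valid)) * depth_scale
```

Called with the centroid of the hand, this would return a single distance that later steps can compare against depth zones.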

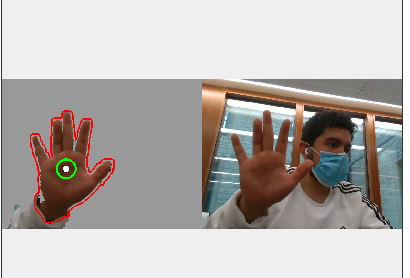

Using OpenCV, the hand coordinates were found by computing the coordinates of the centroid of the hand. The hand was detected by creating a mask based on an HSV range that matches the color of the hand. The mask was then used to find contours in the image. The resulting contour should match the shape of the hand, and its centroid was taken as the position of the hand.

The farthest point of the contour was determined using convex hulls and convexity defects from OpenCV. From this, a line was drawn between the centroid of the hand and the point on the contour farthest from the centroid. The distance between these two main points is calculated from this line and is used to determine the gripper status: open or closed.

The arm is the PincherX 100, and its control stack has to be started with the following Robot Operating System (ROS) command: `roslaunch interbotix_xsarm_control xsarm_control.launch robot_model:=px100`. This ensures the robot can be controlled through the script I created. The robot’s end effector follows the location of the centroid in the stream. If the centroid of the hand passes a certain vertical or horizontal distance boundary, the arm changes its joint positions so that the end effector matches that position. For example, moving your hand up will result in the robotic arm moving its end effector up as well. The same applies to the other main directions.
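The boundary check can be sketched as a small pure function that turns the centroid position into direction commands. The function name, the string labels and the `dead_zone` fraction are hypothetical; the project's actual boundaries are not given. Sending the resulting command to the arm is left out of the sketch.

```python
def direction_from_centroid(cx, cy, width, height, dead_zone=0.2):
    """Map a centroid pixel to (horizontal, vertical) commands.

    dead_zone is an assumed fraction of the frame size around the image center
    inside which no motion is commanded. Image y grows downward, so a smaller
    cy means the hand is higher in the frame.
    """
    dx = (cx - width / 2) / width
    dy = (cy - height / 2) / height
    horizontal = "right" if dx > dead_zone else "left" if dx < -dead_zone else None
    vertical = "down" if dy > dead_zone else "up" if dy < -dead_zone else None
    return horizontal, vertical
```

Left and right here are in image coordinates; whether they need to be mirrored depends on how the camera faces the user.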

The groundwork for the gripper has already been set and all that is left is the implementation. Since depth is incorporated into the system, the pixel distance between the center of the hand and the index finger, for example, varies greatly depending on how far the hand is from the camera. If the distance between these two points is less than a threshold, the gripper will close, and if it is greater than the threshold, the gripper will open. To compensate for depth changing the distance between the centroid and the index finger, there are three zones with different thresholds: close to the camera, far away from the camera and in between. Additionally, if the user's hand falls in the far zone, the gripper moves towards the user; if it falls in the close zone, the gripper moves away from the user; and if it is in the in-between zone, the arm stays at its current depth.
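The zone logic described above can be sketched as follows. All numbers (zone limits in meters, per-zone pixel thresholds) and all names are hypothetical placeholders chosen for illustration; the project does not state its actual values.

```python
# Hypothetical zone limits in meters and per-zone thresholds in pixels.
NEAR_LIMIT_M = 0.4
FAR_LIMIT_M = 0.8
GRIP_THRESHOLD_PX = {"near": 120, "middle": 80, "far": 50}

def depth_zone(distance_m):
    """Classify the hand distance into one of three zones."""
    if distance_m < NEAR_LIMIT_M:
        return "near"
    if distance_m > FAR_LIMIT_M:
        return "far"
    return "middle"

def gripper_command(spread_px, distance_m):
    """'close' if the centroid-to-fingertip distance is below the zone threshold."""
    threshold = GRIP_THRESHOLD_PX[depth_zone(distance_m)]
    # A spread exactly equal to the threshold is treated as open (an assumption).
    return "close" if spread_px < threshold else "open"

def depth_motion(distance_m):
    """Far hand: move toward the user; near hand: move away; otherwise hold."""
    zone = depth_zone(distance_m)
    if zone == "far":
        return "toward_user"
    if zone == "near":
        return "away_from_user"
    return "hold"
```

For example, with these placeholder values a spread of 100 pixels counts as a closed hand when the hand is near the camera, but as an open hand in the middle zone, where the hand appears smaller.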

Results

The robotic arm is able to track the user’s gestures accurately. If the user moves their hand in any direction, the robot mimics the motion. This applies to vertical and horizontal movement as well as movement towards or away from the user. The algorithm does pause when it detects a new gesture, which makes the video stream less smooth, but this can be addressed in future work.